
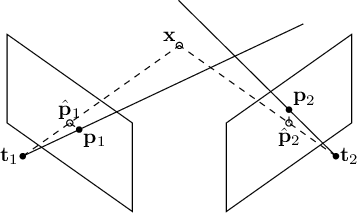

Observing figure 9.2, it is easy to infer that the solution to the triangulation problem is the intersection point of the optical rays generated by the two images. The problem extends naturally to the case of $n$ views.

Due to inaccuracies in identifying homologous points (calibration errors would deserve a separate discussion), the lines formed by the optical rays are generally skew and do not intersect. In this case, it is necessary to find the closest solution under some cost function: a least-squares solution can always be computed with two or more views.

Every optical ray subtended by the image pixel $\mathbf{p}_i$ can be written in parametric form as

$$\mathbf{x} = \mathbf{o}_i + \lambda_i \mathbf{v}_i \qquad (9.11)$$

where $\mathbf{o}_i$ is the optical center of the $i$-th camera and $\mathbf{v}_i$ indicates the direction of the optical ray in world coordinates.

The unknowns are the world point to be estimated, $\mathbf{x}$, and the distances $\lambda_i$ along each optical ray.
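The least-squares formulation can be sketched in Python with NumPy, assuming each ray is given by its optical center $\mathbf{o}_i$ and a direction $\mathbf{v}_i$ (the names `closest_point_to_rays`, `origins` and `directions` are hypothetical). Minimizing the sum of squared perpendicular distances from $\mathbf{x}$ to the rays eliminates the $\lambda_i$ and, with unit $\mathbf{v}_i$, leads to the $3 \times 3$ linear system $\sum_i (\mathbf{I} - \mathbf{v}_i\mathbf{v}_i^{\top})(\mathbf{x} - \mathbf{o}_i) = \mathbf{0}$:

```python
import numpy as np

def closest_point_to_rays(origins, directions):
    """Least-squares point closest to n rays x = o_i + lambda_i * v_i.

    origins:    (n, 3) optical centers o_i
    directions: (n, 3) ray directions v_i (any nonzero length)
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, v in zip(np.asarray(origins, float), np.asarray(directions, float)):
        v = v / np.linalg.norm(v)
        # Projector onto the plane orthogonal to the ray: M (x - o) is the
        # perpendicular offset of x from the ray (M is symmetric and idempotent).
        M = np.eye(3) - np.outer(v, v)
        A += M
        b += M @ o
    # Normal equations of sum_i ||M_i (x - o_i)||^2; singular if all rays are parallel.
    return np.linalg.solve(A, b)

# Two skew rays: the x axis, and a line parallel to y at height z = 1.
x = closest_point_to_rays([[0, 0, 0], [0, 0, 1]], [[1, 0, 0], [0, 1, 0]])
# x is the midpoint of the common perpendicular, (0, 0, 0.5)
```

For noise-free rays that actually meet, the same function returns their intersection point exactly.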

The closed-form solution for the case of only two lines is given in section 1.5.8. This technique can be applied to a pair of cameras in which the first is aligned with the world axes and the second is positioned relative to the first according to relationship (9.3).
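For two rays, the unknowns $\lambda_1, \lambda_2$ can also be found explicitly by setting to zero the derivatives of $\|\mathbf{o}_1 + \lambda_1\mathbf{v}_1 - \mathbf{o}_2 - \lambda_2\mathbf{v}_2\|^2$. The sketch below is one standard closed form, not necessarily the derivation of section 1.5.8, and the function name is hypothetical. Besides the midpoint, it returns the residual distance between the two rays, a direct measure of how skew they are:

```python
import numpy as np

def triangulate_two_rays(o1, v1, o2, v2):
    """Closest points on rays o1 + l1*v1 and o2 + l2*v2.

    Returns (midpoint, l1, l2, gap), where gap is the distance between the rays.
    """
    o1, v1, o2, v2 = (np.asarray(a, float) for a in (o1, v1, o2, v2))
    d = o2 - o1
    # Zero gradient of ||o1 + l1 v1 - o2 - l2 v2||^2 with respect to l1 and l2.
    A = np.array([[v1 @ v1, -(v1 @ v2)],
                  [v1 @ v2, -(v2 @ v2)]])
    rhs = np.array([v1 @ d, v2 @ d])
    l1, l2 = np.linalg.solve(A, rhs)  # singular if the rays are parallel
    q1 = o1 + l1 * v1
    q2 = o2 + l2 * v2
    return (q1 + q2) / 2, l1, l2, np.linalg.norm(q1 - q2)
```

With noise-free data the gap is zero and the midpoint coincides with the true point; with noisy homologous points the gap grows with the error.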

Exploiting the properties of the cross product, one can arrive at the same formulation using the perspective projection matrices and the image points, expressed in homogeneous coordinates. Since $\mathbf{p}_i$ and $\mathbf{P}_i \mathbf{x}$ represent the same image point up to a scale factor, their cross product vanishes:

$$\left\{
\begin{array}{l}
\left[ \mathbf{p}_{1} \right]_{\times} \mathbf{P}_1 \mathbf{x} = 0 \\
\vdots \\
\left[ \mathbf{p}_{n} \right]_{\times} \mathbf{P}_n \mathbf{x} = 0
\end{array}\right. \qquad (9.12)$$

with $[\cdot]_{\times}$ the cross product written in matrix form.

Each of these constraints provides three equations, but only two are linearly independent. All these constraints can ultimately be rearranged into a homogeneous system of the form

$$\mathbf{A}\mathbf{x} = \mathbf{0} \qquad (9.13)$$

where $\mathbf{A}$ is a $2n \times 4$ matrix (two independent rows per view), with $n$ being the number of views in which the point $\mathbf{x}$ is observed.

The solution to the homogeneous system (9.13) can be obtained using singular value decomposition: under the constraint $\|\mathbf{x}\| = 1$, the least-squares solution is the right singular vector of $\mathbf{A}$ associated with the smallest singular value, and the world point is recovered by dividing by its fourth homogeneous coordinate.

This approach is referred to as Direct Linear Transform (DLT) by analogy with the calibration technique.
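A minimal NumPy sketch of DLT triangulation, assuming $3 \times 4$ projection matrices and image points given as $(u, v)$ or homogeneous triples; the names `skew` and `triangulate_dlt` are hypothetical. It keeps the first two rows of each $[\mathbf{p}_i]_{\times}\mathbf{P}_i$, which are independent whenever the third homogeneous coordinate of $\mathbf{p}_i$ is nonzero, and takes the last right singular vector of $\mathbf{A}$:

```python
import numpy as np

def skew(p):
    """Matrix [p]_x such that skew(p) @ q equals np.cross(p, q)."""
    return np.array([[0.0, -p[2], p[1]],
                     [p[2], 0.0, -p[0]],
                     [-p[1], p[0], 0.0]])

def triangulate_dlt(points, projections):
    """Linear (DLT) triangulation from n >= 2 views; returns a 3-vector."""
    rows = []
    for p, P in zip(points, projections):
        p = np.asarray(p, float)
        if p.shape == (2,):
            p = np.append(p, 1.0)  # homogeneous image point
        C = skew(p) @ np.asarray(P, float)  # [p_i]_x P_i: 3 rows, rank 2
        rows.append(C[:2])  # keep two independent rows
    A = np.vstack(rows)  # 2n x 4
    # min ||A x|| subject to ||x|| = 1: right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]
```

With real pixel coordinates, normalizing points and matrices before building $\mathbf{A}$ (as is commonly done for DLT calibration) improves conditioning; the sketch omits that step for brevity.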

Minimization in world coordinates (or of the algebraic error of (9.13)), however, is not optimal with respect to noise. In the absence of further information about the structure of the observed scene, and assuming Gaussian noise on the image points, the optimal estimate (Maximum Likelihood Estimation) is the one that minimizes the error in image coordinates (reprojection error). It requires a greater computational burden and the use of nonlinear techniques, since the cost function to be minimized is

$$\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} \sum_{i=1}^{n} \left\| \mathbf{p}_i - \mathbf{P}_i \mathbf{x} \right\|^2 \qquad (9.14)$$

where $\mathbf{P}_i$ is the projection matrix of the $i$-th image (see figure 9.2), and both $\mathbf{p}_i$ and $\mathbf{P}_i \mathbf{x}$ are normalized to have third homogeneous coordinate equal to 1 before the difference is taken.

This is a non-convex nonlinear problem with potentially multiple local minima, so the linear solution is used as the starting point of the iterative minimization.
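The refinement of (9.14) from the linear estimate can be sketched with a few Gauss-Newton iterations. This is one possible choice of nonlinear solver (Levenberg-Marquardt, for instance via SciPy's `scipy.optimize.least_squares`, is a more robust alternative), and the function names are hypothetical. Each view contributes two residuals and a $2 \times 3$ Jacobian block obtained by differentiating the perspective division:

```python
import numpy as np

def reprojection_residuals(X, points, projections):
    """Stacked 2D residuals p_i - proj(P_i [X; 1]) of the cost (9.14)."""
    Xh = np.append(X, 1.0)
    res = []
    for p, P in zip(points, projections):
        q = np.asarray(P, float) @ Xh
        res.append(np.asarray(p, float) - q[:2] / q[2])
    return np.concatenate(res)

def refine_gauss_newton(X0, points, projections, iters=20):
    """Minimize the sum of squared reprojection errors starting from X0 (e.g. the DLT estimate)."""
    X = np.asarray(X0, float).copy()
    Ps = [np.asarray(P, float) for P in projections]
    for _ in range(iters):
        r = reprojection_residuals(X, points, Ps)
        Xh = np.append(X, 1.0)
        J = []
        for P in Ps:
            q = P @ Xh
            dq = P[:, :3]  # derivative of q = P [X; 1] with respect to X
            # Quotient rule for q[:2] / q[2]; the residual Jacobian has the opposite sign.
            J.append(-(dq[:2] * q[2] - np.outer(q[:2], dq[2])) / q[2] ** 2)
        J = np.vstack(J)  # 2n x 3
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)  # linearized least-squares step
        X += step
        if np.linalg.norm(step) < 1e-12:
            break
    return X
```

Started far from the solution, plain Gauss-Newton may converge to a local minimum or diverge, which is why a good linear initialization matters.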

Another class of techniques, which exploits the epipolar constraint to estimate the noise-free positions of the image points without having to compute the three-dimensional point, is presented in section 9.4.4.

Paolo Medici